Who am I?

tl;dr When I began to figure out what made me the person I am, in a professional capacity, it turned out that there are many important influences, so this exercise proved to be much lengthier than anticipated. I hope that it contains enough interesting tidbits to make reading this post worthwhile. I operate from a different mindset and have unique traits that have their own drawbacks and advantages, which become evident as you follow my steps. Addendum April 2024: It turned out that I have a condition best described as zero autistic. It doesn't entail the irrational behaviour usually associated with autistic people, but makes you see much more detail. While this has drawbacks in real life, because it makes you consider many more details where people usually say this is obvious and simple, it has many benefits for working on technical and scientific challenges, especially for someone trying to unriddle how intelligence truly works. If you read this post, remember this and enjoy seeing the world for a moment from a different point of view. While it may appear childish at first, it probably isn't.

This question has been asked so often recently, mostly by people in the games industry, HR, and managers, that it has somehow become the theme of my last two months.

I always answered it with certainty: lead AI programmer, senior physics engineer, CTO for AI and physics, inventor who works on cutting-edge tech and wants to found a high-tech unicorn. But at some point I had to take a step back and reflect a bit.

What is truly me and what is an assumed role? It is a bit like the time-honoured adage: if we want to shape our future, we have to understand where we are coming from. This is particularly true if you are at a pivotal moment in your life.

Childhood perspective

As a child I always wanted to learn. I still get stories from my parents that I questioned everything, even why something was named a specific way. Primary school taught me something, not necessarily what I was interested in, but most importantly an essential skill: reading. In 1970s Germany, in a village of about 2000 people, we had a library bus catering to school children. I still fondly remember the illustrated books explaining the world: nature, animals, plants, ecosystems, archeology, what we knew of previous ages like that of the dinosaurs, machines, agriculture, society, politics (in an idealized way), cars, aircraft, geography, geology, weather and much more. It helped me to grasp how the world works as a whole, and that everything is connected. It was all books; we didn't have the internet back then. I can only imagine that, if we had had the internet, it would have been exceptionally hard to unglue me from a PC and from all the knowledge I could have acquired. But somehow not having all the details but a broader picture stimulates the mind differently, and in hindsight it is not clear which is better.

I was very good at maths and still questioned everything. I didn't take what a teacher said for granted but always asked for evidence. Luckily for me maths was taught in a way that it always presented evidence, or a way to arrive logically at a conclusion, before giving you a formula for further use. Once you make this a habit, understanding all the parts, you gain superior insight and skills in possibly everything scientific. You understand what you know but you also understand what you don't know. The latter is essential to understand your limits and how to tackle these.

Somehow at secondary school, around 1980, I was already convinced that the human habit of not caring about the consequences of what you do, nor understanding it, was bound to doom us all sooner or later. It added some urgency to my quest to grasp how the world works as a whole, to identify the problems and find solutions.

My class was among the first to be taught how to program, voluntarily back then, only if you wanted to. I still remember our first lesson vividly: the teacher first explained to us what an algorithm is and gave us examples of pseudo code in natural language. It was simple and logically sound, like maths. There were sequences of instructions to be performed, conditions like if .. then, loops like repeat .. until .., and it was intuitively clear that every instruction can be a placeholder for something much more sophisticated, like solve the equation, which represents a much more complex set of instructions. Somehow natural language is a remarkably complete tool to describe any possible sequence of instructions, including the means of abstraction. In this first hour I learned that once you imagine that you have a machine that can process these instructions logically, faithfully or whatever you call it, these become more than words.

Immediately I understood that this is an exceptionally powerful tool with which you can change the world, with which you can solve problems you couldn't solve before. Before the lesson was over, excited as I was, my mind had worked out my first algorithm, which looked something like this:

Repeat

Learn how the world works;

Understand what you know and what you don't know;

Identify problems that need solving;

Understand the solutions to the problems;

Improve this algorithm to gain more insight;

Until all problems are identified and addressed.

Little did I know about Turing machines, the limits of what is computable, automata theory or formal languages. Neither was I aware what barrier algorithms that modify themselves present nor that this short algorithm was the blueprint for a universal AI, something that I would tackle thirty years later. What I saw was a tool that allowed me to formulate a task, and that it needed two components: an interface to acquire insight and a machine to process it.
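For readers who have never seen a repeat .. until construct, here is a minimal Python sketch of its shape. It is an illustration only, not what we wrote in class: Python has no repeat .. until, so the helper `repeat_until`, the step function `learn` and the toy lists `unknown` and `known` are all made-up names, and the single step stands in for the far richer instructions of the algorithm above.

```python
from typing import Callable, Iterable, List


def repeat_until(steps: Iterable[Callable[[], None]],
                 done: Callable[[], bool],
                 max_rounds: int = 1000) -> int:
    """Run every step once per round, then test the exit condition.

    Like repeat .. until, the body always runs at least once.
    max_rounds is a safety net (an assumption of this sketch) so that a
    condition that never becomes true cannot loop forever.
    """
    step_list = list(steps)
    rounds = 0
    while True:
        for step in step_list:
            step()          # each step may hide arbitrarily complex work
        rounds += 1
        if done() or rounds >= max_rounds:
            return rounds


# Toy stand-ins for "the world": things not yet understood, things understood.
unknown: List[str] = ["fire", "wheel", "writing"]
known: List[str] = []


def learn() -> None:
    """Placeholder for 'learn how the world works': move one item over."""
    if unknown:
        known.append(unknown.pop(0))


rounds = repeat_until([learn], done=lambda: not unknown)
print(rounds, known)
```

Each entry in `steps` is a placeholder in exactly the sense described above: the loop does not care how sophisticated the work behind a step is.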

Small-mindedness is evil

In principle it was clear to me that my path is to study computer science. But first there were a couple of obstacles.

In Germany you had to take an Abitur exam at the end of your 13th year of school to be eligible to attend a university. For this you had to select four courses in which you would be tested, and one of these had to be from the field of arts and languages. For me this was arts. The teacher, who was from the Netherlands, had German as a second language and described himself as the descendant of slaves owned by the company Jacobs, totally failed to instill in me any sense of why this endless rambling about the intentions of the artist, the perception of the audience and art styles merited my attention. I got in trouble for frequently not showing up for the Saturday lessons, and the grades I got were bad. As you had to take these courses over two years, I had the opportunity to switch to another art course with a different teacher after one year. My grades improved significantly but I guess the damage was already done.

To pass the Abitur you had to take three written exams and one oral exam, which was arts for me, and score at least a minimum number of points (5 out of 15) in each. When the day of the oral exam came, they revealed to me that the exam would be conducted not by my current arts teacher, but by my previous one. The subject was a classic Dutch painting and I was told to give my thoughts about it. For me this was an exercise in arbitrariness, but I obliged and answered the questions as best I could. I was later informed that they gave me five points. As I had top grades in my scientific exams, I didn't worry about this at that point. But years later it dawned on me that the arts teacher possibly wanted to award me even fewer points, which was prevented only by the intervention of my tutor, who was also my maths teacher and had this right. I still have nightmares about not passing the Abitur and not having had the opportunity to study computer science. In hindsight it seems to me that they wanted to teach me a lesson, which they did, but I am not sure that the lesson I learned is the one they intended.

After successfully passing the Abitur, I was called up to do compulsory military service for 15 months. The system is cleverly organised: you have little time to look into this beforehand and you are told that you have to do it. The time there was absolutely dull, but in the first three months you are drilled until you are tired in the evening and lose your drive. It establishes itself as a rhythm, which is hard to shake off even after the first three months. It makes you lose your edge in smart thinking, maths and analysis. But somehow I began to question the order of things, realizing that this whole exercise was about turning people into weapons and making them obey once politicians deem military confrontation inevitable. In Germany you have the right to be a conscientious objector and refuse to perform military service. So I did this while I was there, though this was a tough choice, because it would also mean that I would have to defer my study of computer science by another year, which I only avoided by a concatenation of lucky circumstances.

Where do I belong

Actually learning to program, the full methodology and science behind it, not just writing arbitrary programs in Pascal, was uplifting. A microcontroller lab where you program device I/O, learning assembler, and writing a Modula parser in a four-man team exercise are all activities I remember fondly. But if you really wanted to get deeper into programming, you needed to do more on your own. I got an Atari 1040ST, alongside the Amiga an ideal machine for a student at this time, and began to write assembler, C and later C++ programs. First as a hobby, a small tool to circumvent the copy protection on floppy disks, and later as a student working for one of the institutes of the university.

But I was also very good at algebra and theoretical computer science, automata theory and formal languages, and liked the solid foundation that the lectures on operating systems and algorithms provided. What I didn't like much was the teaching on databases, as these seemed way too arbitrary to me. Interestingly, at this time neural networks quickly became a focus of interest, but after it was established that these were way too inefficient, they faded away as quickly as they had come.

I spent roughly five years at university, and once I had the feeling that I had learned what I could learn there, I looked forward to applying my skills in practice.

Wildly ambitious

I had the opportunity to set up my own company right after university. This was a time when large strides were made towards building large inexpensive supercomputers from processors that work in parallel. The market leader was a company called Inmos that had previously produced a so-called transputer, the T805. Essentially it was a high-performance CPU that had specific interfaces for communicating with other processors and for building large systems made up of many CPUs and memory chips, the key point being that customers could design the architecture and size of the machine as they saw fit. In theory you could give each transputer its own local memory chip, but you could also design large networks of transputers that share data smartly. It offered a high level of parallelization without memory bottlenecks, and its architecture was way ahead of its time. At the time I founded Simulation Systems, Inmos had already produced samples of their new flagship transputer, the T9000, which exceeded the T805 in every aspect and was on par with the best Intel processors of its time. There were also already companies designing systems with it, like Parsytec.

The picture below shows the cover of a detailed manual that describes the hardware architecture of the T9000 CPU as well as the diverse buses and companion accelerator and memory chips.

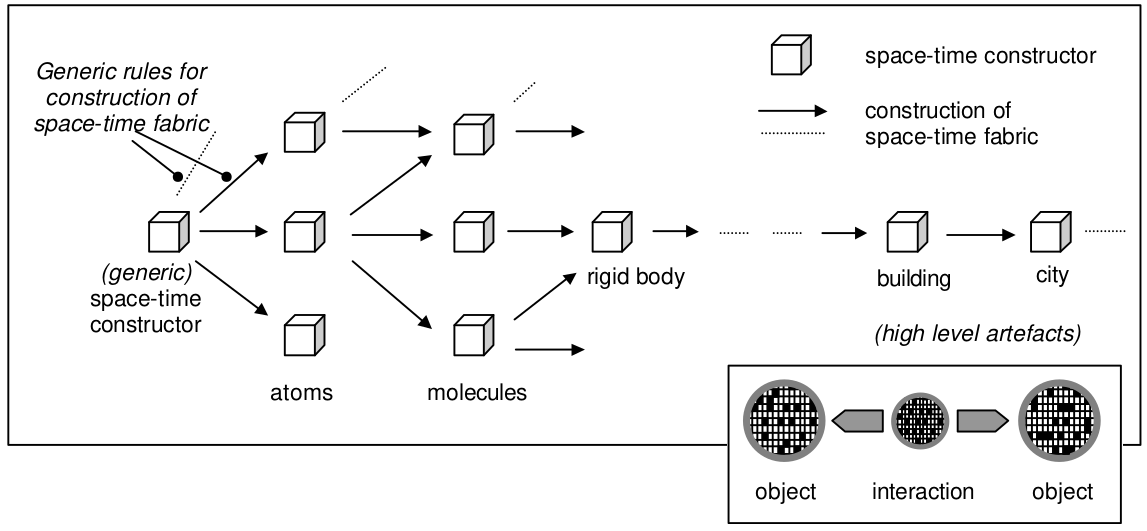

What I intended was to build a cost-efficient supercomputer, to have unmatched computing power to run the most sophisticated scientific simulation that had been attempted so far.

Sort of a machine that can change how science is done, with a simulation that offers new insights and allows us to make rapid progress in critical engineering fields. The plan was to simulate physics on the level of elementary particles, like electrons, protons and neutrons, then scale the simulation up to predict the behaviour of atoms and molecules, and then scale this up to arrive at the properties of fluids, gases and rigid bodies. So that you can essentially run virtual experiments in space, i.e. in vacuum and zero gravity, under extreme pressure and so on, without having to build extremely costly labs. My overriding idea was that with a machine like this, you could design a fusion reactor, which ITER has been working on for 30 years, in a much shorter time frame. Had this project been successful, we would have abundant amounts of clean energy today.

As you can see in the picture of the processor manual above, at this time Inmos had already been acquired by the French IT giant SGS Thomson.

I was a young entrepreneur back then, and while I had a vision of the tech, and I believe the skills to make it a reality, I knew little about the real risks our corporate world harbors. Technically the project was wildly ambitious, the hardware was already fairly advanced and would have been manufactured by a strong partner, and my core competence was truly parallel computing, writing my own parallel operating system, and then developing an advanced scientific simulation application on top of that.

It never came to this because SGS Thomson decided that they were better off manufacturing other processors in the state-of-the-art fabs they had acquired from Inmos.

Reorientation

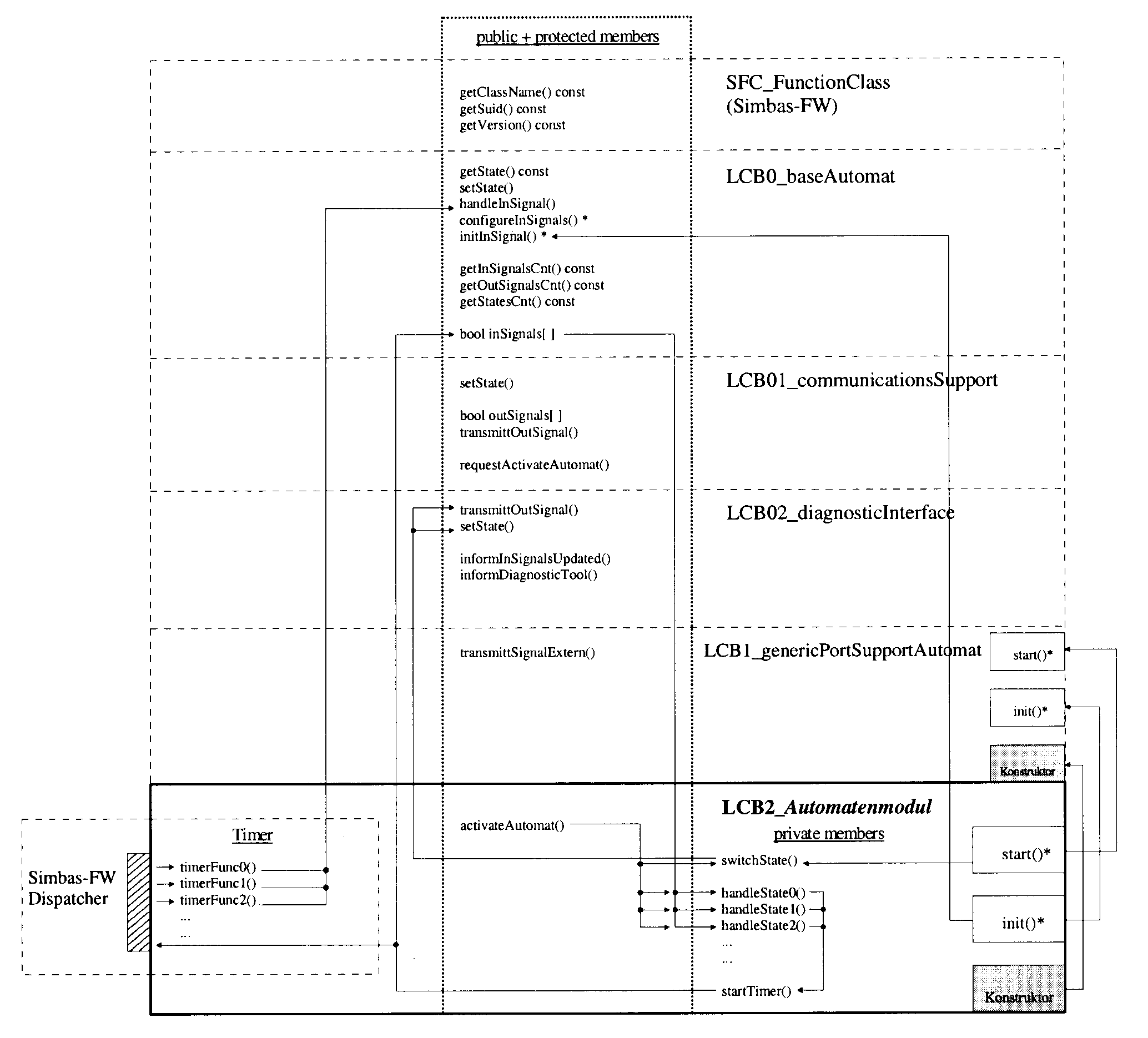

The question was what to do next. Working with ITER was one option, but I decided to work for a large engineering company in town, Siemens, who developed automatic railroad traffic control systems there. I was invited to an interview, but as these have never sat well with me since my experience with the Abitur exam, I somehow must have objected to being asked all these questions. I remember that the hiring manager at one point said, please calm down, I just want to figure out what you can do. In the end they gave me a contract with good conditions, i.e. paid holidays and limited working hours, that paid 8K a month to develop software for them.

The product they worked on was relatively new, so they didn't have much code in place, and the first thing I did was to review their code in my spare time at home. It seemed to me a bit cumbersome, not efficient and not reliable enough, as well as unduly complex, which would make it more difficult to maintain over time. So I wrote a new version to demonstrate how it could be done instead. Not knowing anything about proper etiquette at that time, I brought it to work and presented it to our manager to discuss improvements. On the next day I was called into the manager's office and told that they couldn't manage two branches of the software in parallel. But he said that they were throwing out what they had before and were going with the solution I had developed. So after a few weeks I was more or less in charge of the software architecture for their product. The work was smooth, and after roughly six months we had a working and optimized prototype.

Of course there were also a few hiccups. When I included the hours I had worked on the prototype at home, their payroll manager was furious and accused me of fraud until our manager stepped in. The product we developed was part of a larger railroad traffic control framework, and I discovered that their documentation was wrong and that the handshake between the two components could sometimes produce wrong results. This would only happen rarely, but it was of course the type of bug you absolutely don't want in a railroad traffic control system: a malfunction that was hard to track and unpredictable. As a result it was suggested that a colleague and I should work for a time with the department that developed the larger framework. It was in a neighboring building on the company's grounds, and when we got there, the first thing we noted was that the air in the office was not good. We were told that the building had asbestos insulation, and though the company had permission to continue using the building, they couldn't open the windows because that could let asbestos into the office. My colleague and I were dubious about that and the idea was dropped. But of course it ruins the image of the corporation, making it look like the proverbial greedy organisation that risks the health of its employees. The area they occupied there was possibly about 10 hectares, so they had ample space.

At one point I asked for a pay rise, which was declined by the people in charge of this, but my manager suggested that I could bill as many hours as I wanted and he would back this. In hindsight I believe I didn't give it thorough enough thought, maybe because of the incidents with the asbestos and the accusations, but it was a good position that would have been very well paid. I made sure that they had a successor for me and helped two new developers to understand the ins and outs of the software, but then I left. What I had gained was an in-depth insight into how large corporations organize themselves, which in many ways was not what you would expect.

HR and learning organisations

I was offered the opportunity to work on a study into how digital and online learning transforms organisations. It wasn't working on tech for once, but the work could broaden my background considerably. When I tentatively said that I needed at least 75 DM per hour, the owner answered that he would give me 80. As this was contract work, I was eligible for generous public funding for people establishing a new startup, based on your previous salary, which amounted to something like 5K a month for a year on top of what you actually earned, so the pay was considerably higher than at Siemens without the extra hours.

The work itself was to figure out how a large sample of successful companies used digital technology to empower their employees and to change their operations to become learning organisations, i.e. companies that constantly adapt because their employees are aware of the market and changing trends and acquire necessary new skills quickly. Technically the study was conducted for RWE, a very successful German multinational, and one of their directors had countless contacts at many successful companies in Germany and provided me with recent, inside, in-depth material to grasp what challenges HR was facing and how they went about making learning material and tools available to their employees. My task was to structure all the sources, categorize them, and evaluate cause and effect, best practices, success factors and so on. This was in 1999, and online learning as well as multimedia learning, i.e. courses that you load from a CD, were relatively new. My main conclusion was that all learning occurred in a social interaction space, partly digital and partly real world, and that the biggest success factor is how well the different parts are integrated, i.e. real world and market data, direct customer feedback, the recognition of the need for new products and skills, the creation of learning materials, and making them available to employees. Critical for success is the motivation of the learners, so social interaction in a larger sense is paramount for long-term success. For its time it was a remarkable conclusion; fast forward 20 years, and this is the world we are living in today.

I learned a lot about HR, its critical role for the long-term success of a corporation, and of course that HR can also fall into a rut that makes companies stagnant in the long run. As an entrepreneur it also gave me a vision of how to structure a company so that it continuously learns and becomes an intelligent entity that adapts to the market and changing needs.

Once this study was complete, I was offered a new one, but I missed the work on real tech.

Full-stack development

With digitalisation progressing during the dotcom boom, companies emerged that offered high-level services to other companies, like Carrots AG in Cologne, whose premium product was a complete event roadmap, including organising these events, for a company's stock market launch (IPO for short). During the interview I was accompanied by a head hunter and she did most of the talking while I only had to add some perspective from time to time. Which worked. They offered me a contract worth 65K per 500 hours, which I typically work in ten weeks.

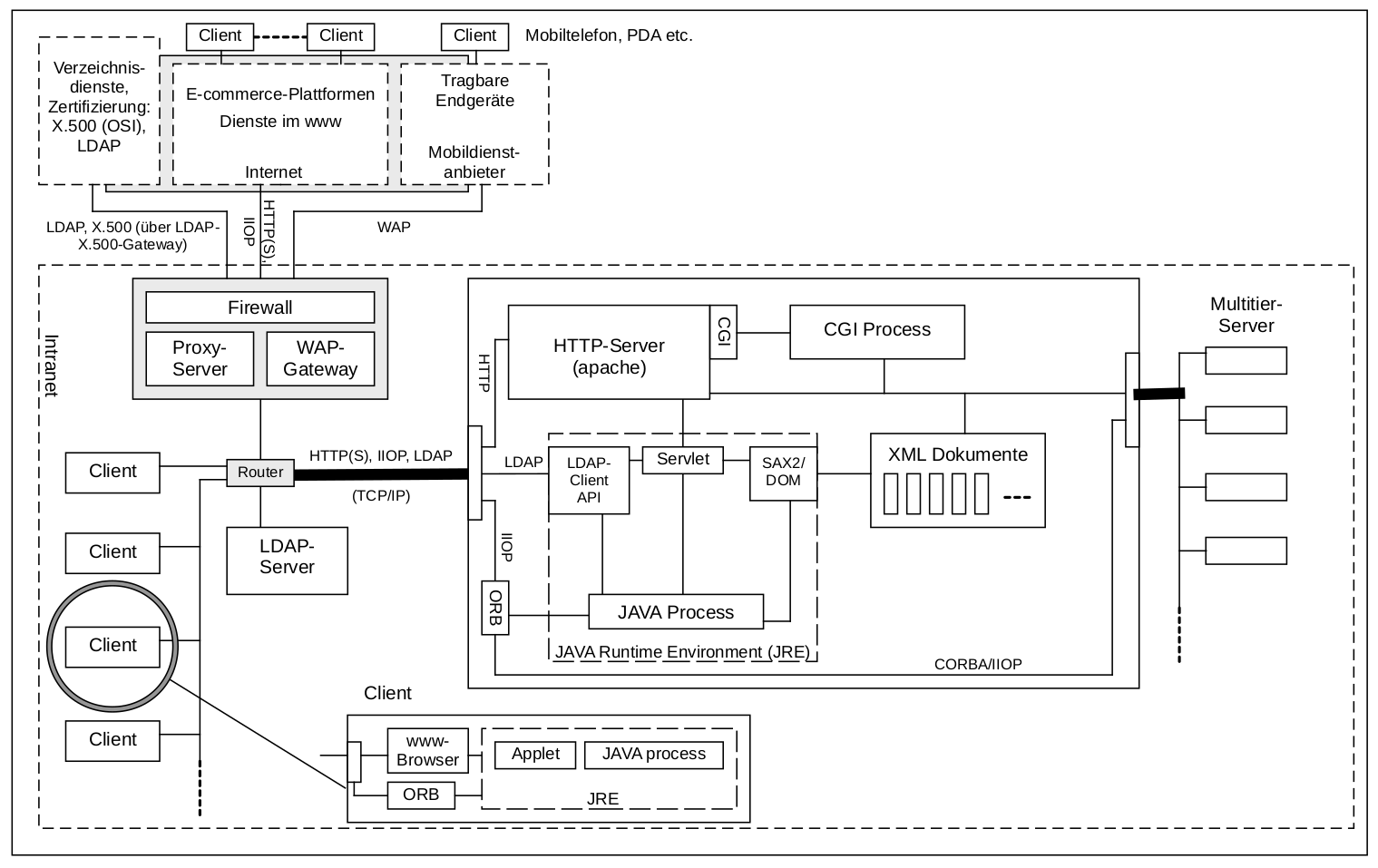

The work involved developing an online platform for the company to provide their services to their clients, including the management of the events and communication between all parties.

The challenge was to create a platform that is versatile enough to meet all current needs, but it would be much better to design it in a way that it can grow with a further evolving internet. To solve this I developed an understanding of all the functional blocks needed to deliver its services and to interface with the backend and clients. There are usually many tools to choose from, but my assessment was that using established standards and robust, proven open source solutions for each functional block would give us a maximum of versatility and control, that is ownership, of the platform. Interestingly it turned out that such an option existed for each vital functional block, and that the work of implementing such a system wouldn't exceed what you spend using commercial third-party solutions.

It was a diverse company with people of many different skills working together to provide a spectrum of event services. It was the first time I worked with internet visionaries, who didn't understand the tech in detail but developed a vision of what they would want to achieve in terms of interacting with and across the internet. It led to interesting discussions and I cherished this.

After roughly six months I had a meeting with the owner and the chief visionary about becoming the CTO of the company. Aside from the general issues I have with such interviews, always feeling like I am on the back foot and expected to talk when I would rather consider the implications carefully, I also find it difficult to grasp the negotiation space, i.e. what you have to offer and consider. It is as if such interviews are simply not compatible with my mode of thinking, and so the meeting didn't produce any result.

But it didn't make a difference, as this was at the height of the dotcom crash, IPOs didn't happen at this time, and the financial strain on the company was too much.

Physics and abstraction

Pause. The chief visionary had a friend who was the son of one of the founders of one of those large Japanese multinationals but who had come to Germany to make a career on his own. He had outstanding management talent and is today one of the top managers at Sony. The three of us formed a team, a top manager, a visionary and a tech guru, to found a company called Xynesis.

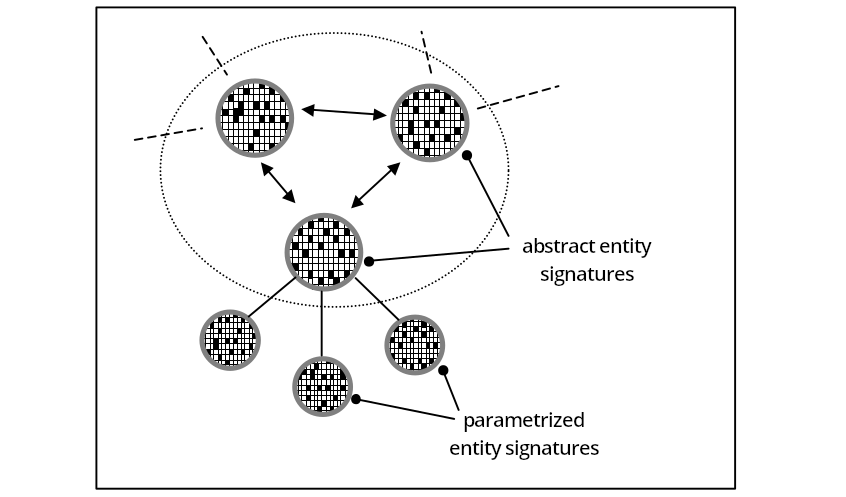

My idea was to create a physics engine that can do what Havok can do but expands on it significantly by solving how abstraction can be implemented, i.e. by simulating entities that consist of many component entities in an adaptive way much more efficiently, and thus to open up virtual worlds with much more detail, functionality and performance, similar to the goals of UE5's Nanite and Lumen today, but more fundamental in its approach. It was the technology I had developed for Simulation Systems earlier. The business case was to develop the engine and build a video game with it.

This was at the height of the dotcom crash though, and it was exceptionally hard to find the needed capital, even though we had good contacts. The answer that we usually got was that a year earlier it would have been greenlit easily, but now they were not in a position to invest.

Eventually we needed to move on, though I was too fascinated to give up on the prospect. During the 90s I had begun to play PC games, initially Wing Commander and Starflight, Civilization and Master of Orion II. It was highly interesting, as the games evolved with the progress in technology all the time, as if video games embodied the spirit of pushing the edge of what you can do. At the end of the 90s we had games like Baldur's Gate, Heroes of Might & Magic III, Homeworld and Deus Ex. It was an incredible time for fans, and you always scoured the gaming magazines excitedly for news on the next big title and for the occasional miraculous surprise appearing on the horizon.

I wrote a demo and applied with it at Relic Entertainment, the developers of Homeworld who were still independent back then. What I got was an invitation for a phone interview, but I found it impossible to navigate because English being my second language compounded the difficulties I had with interviews already.

Developing the tech for an engine with built-in abstraction was challenging and it was doubtful that I could complete it alone. But a proof of concept with a demo that showed that it is useful for a game like Homeworld would be a big step already. So I set out to work on the tech and in 2006 moved to Vancouver, which had at this time a vibrant community of developers, one of them being Relic Entertainment.

But by a stroke of luck I somehow got the opportunity to work on Heroes of Might & Magic V and was tasked with upgrading its AI. HoMM V in short is a complex turn-based strategy game with a vast number of rules that define how the game world interacts. Fortunately the source code of HoMM V was based on the source code of the very successful HoMM III, which was developed by the series' original creator, New World Computing. Moreover that particular installment's AI was sort of the best of its kind in the entire industry, and for a tech guru like me who grasps concepts quickly, it was an ideal introduction to how to build advanced AI for turn-based games. It is a little known fact that the AI for turn-based games is much more demanding to write, because the players can examine the AI's reactions in minute detail and figure out exploits. So it has to be robust and do a realistic lookahead, whereas in real-time games every interaction is embedded in a constant flow of events that happen in the game continuously.

Half marathons

Technically I offered to improve the AI considerably, after having pointed out the weaknesses their current implementation had. I also said that I am very good at analyzing C++ and that I could start working quickly with a dump of the source code. So this is what I got, and the onus was on me to prove what I could do.

By the time I had to wrap up my work, I had made the pathfinder a hundred times faster, introduced a smart lookahead technique called event sequencing, upgraded the internal map format, implemented many techniques to make variant prediction substantially faster and smarter, and refactored the AI to run in a separate process in parallel, so that the AI could process 100 million paths per second instead of the previous 100,000. The paths were also much longer, the selection was smarter, and the AI could do a lookahead of seven turns instead of three. If it were a chess computer, its playing strength would have made a major leap forward. You could also observe the progress in how the AI went about its tasks on the map much more smartly. IMHO HoMM is a substantially more versatile game than chess and demands more of the player, as explained in my post on strategic planning that refers to Luděk Pachman's books about chess, and thus the AI needs to do more long-term strategic planning, i.e. understanding the big picture properly, so it is not exactly right to measure the progress in lookahead alone.

All in all I completed a mountain of work in somewhat more than six months and learned a ton about the design of what is arguably the best AI for a strategy game so far, and about how to upgrade it in every respect. That AI is partly the reason why HoMM III is an evergreen that still makes it into the top 10 best-selling games on GOG.

There was one snag though: just when our producer had publicly announced that there was a major surprise for the community forthcoming, Ubisoft (the publisher) and Nival (the developer) severed their cooperation, so that the upgrade couldn't be published. Instead I published it myself as an unofficial mod with Ubisoft's permission two years later.

AI and physics

Coming back to what I did earlier, under the subheading Physics and abstraction, my work on AI changed my perception of the fields involved. Whereas I had earlier seen abstraction as an extension of physical laws and aggregation into higher-level entities, now I recognized that the vast spaces of possible interactions and variants, which play a central role in AI lookahead, have their own laws that are also fundamental to how physical forces work and concrete spacetime and event horizons manifest themselves. A bit like Stephen Hawking's admission that his A Brief History of Time is wrong, and that the universe is much more like a self-organising entity in which the laws of physics are embedded.

After the work for Ubisoft was finished and I recognized that this tech had an enormous potential, both as a research subject and an engine, it made sense to me to continue working in this direction.

In a way HoMM provided an ideal test bed to research AI, because it is a mostly complete military simulation with different unit and weapon types, e.g. air, land, ranged, close quarters, fortifications, terrain types that affect movement, resource management, building up castles and recruiting units, special skills, units gaining experience, a morale system, and more, all of which requires sound logistics and rewards smart strategic thinking. The situations you find yourself in are almost always unique and differ in many respects. Sometimes resources are scarce, sometimes guardians impede your progress, and enemy factions pursue their own plans. Essentially there are all kinds of feedback systems that depend on each other, and finding a good plan isn't straightforward.

I built a number of prototypes, each technically more advanced and improving the way variants are processed and outcomes predicted, but in principle you always run into the same problems, or, possibly more accurately, encounter the same limitations. If you determine the strategic value of a variant, it usually depends on the context, which in turn depends on what the opposition is doing, which processes its own variants that depend on what you are doing, which leads to ever more processing. Not so much because you can't resolve the recursive dependency, but mostly because small nuances can become decisive and it is hard to track all the dependencies.
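To make the recursive dependency concrete, here is a textbook depth-limited negamax search in Python on a deliberately tiny game. It is not the technique used in my prototypes or in HoMM; the take-away game (remove 1 to 3 stones, whoever takes the last stone wins) and the names `legal_moves` and `negamax` are assumptions chosen only to keep the sketch short.

```python
from typing import List


def legal_moves(stones: int) -> List[int]:
    """A player may remove 1, 2 or 3 stones, but never more than remain."""
    return [k for k in (1, 2, 3) if k <= stones]


def negamax(stones: int, depth: int) -> int:
    """Value of the position for the player about to move.

    +1 means a forced win, -1 a forced loss, and 0 means the lookahead
    horizon was reached before the outcome could be determined.
    """
    if stones == 0:
        return -1   # the opponent took the last stone, so the mover has lost
    if depth == 0:
        return 0    # horizon reached: no verdict
    # My value for a move is the negation of the opponent's value for the
    # resulting position, which in turn depends on my replies, and so on.
    return max(-negamax(stones - k, depth - 1) for k in legal_moves(stones))


shallow = negamax(8, 2)    # two plies of lookahead
deep = negamax(8, 10)      # enough plies to search to the end of the game
print(shallow, deep)
```

With two plies of lookahead the search returns 0 for eight stones, i.e. no verdict, while the deeper search finds that the position is lost for the player to move. Even in this toy the number of variants grows with every extra ply; in a game with thousands of possible moves per turn and many interacting subsystems it explodes.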

It requires a fundamentally different approach to solve this and order the required processing efficiently. Interestingly you face the same dilemma in quantum mechanics: the more complex the systems become, the harder it is to predict their behaviour. If you look at large open systems with countless entities, both quantum mechanics and AI have to deal with vast interaction spaces in which possible events can occur in all kinds of order. The tools we have to deal with this are limited, so I had to embark on fundamentally new research to break new ground. You have to learn to think differently, which is time-consuming and requires many transformative quantum leaps of the mind.

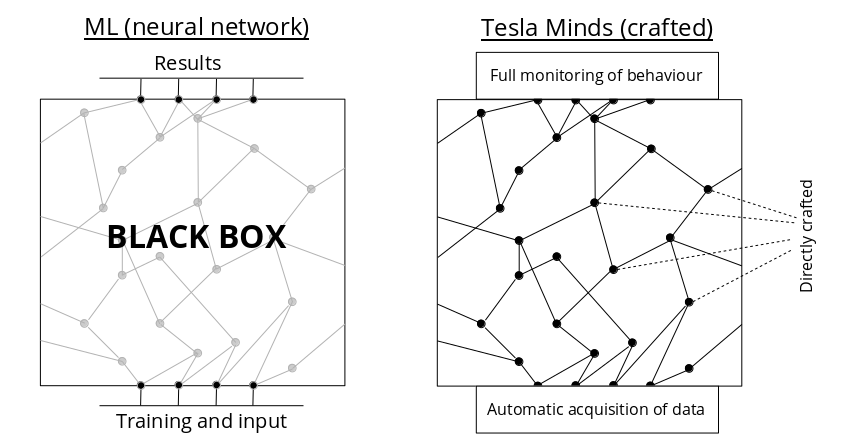

Neural networks, i.e. machine learning (ML), are hopelessly inefficient and require large amounts of training, which severely limits the number of parameters that they can work with reliably. But ML's biggest drawback is that we don't understand how it arrives at a certain result: its core is a black box, and we can't be certain about the quality of the result. However, its mode of operation is in principle desirable in that it can assess something without being held back by the recursive dependencies described above.

What I have researched is in principle how this desirable trait of ML can be implemented directly by AI nodes that we fully control. Put differently, it is no longer a black box: we can now craft much more efficient systems directly. This has many game-changing benefits: it is exponentially faster; it doesn't need training; we can monitor the AI simulation in detail and understand what triggers what; we can implement hybrid systems that ensure critical functions always work, e.g. that safety limits are observed; AI applications can have unlimited functionality and complexity; results are accurate and reliable.

Showcase

That is technically where I am right now. I am open to proving the technology together with a partner, in any suitable showcase.

I am also developing two showcases myself. The first one, as you can guess, is finally the ultimate versatile AI for HoMM V that I set out to develop many years ago. It is a reasonably complete military simulation, so the AI can be scrutinized in many possible ways. The second one is a sophisticated AI/physics engine for a sci-fi strategy game built with UE5. This isn't set in stone, and another showcase can take its place.

This brings me back to the question I asked at the beginning. It is closely related to which route I should take.

After this exercise I would say that my role is CTO for AI and physics, but more generally I am a tech guru who can take any tech task and morph it into something bigger, something more innovative and ambitious.

This means I am open to working for a company in any suitable tech role: AI programmer, physics engineer, engine programmer, CTO, or some advanced C++ UE5 task. What I ask for is the chance to excel at the task and make a project better than expected, as a sort of showcase for my skills. It doesn't need to be AI. Such a project can take two or three years, and if there is a prospect of building something bigger with the company afterwards, and capitalizing on it, it can take longer. Why does this make sense? A showcase I build on my own will take equally long, comes with its own risks, and needs capital. I will always have my HoMM V showcase, which is mostly done. And if the AI is as good as I believe, financially it wouldn't make much of a difference whether I set up my own company in three years or right off the bat, with much bigger tradeoffs in negotiations with financiers.

There is something else I came across recently: Shawn Foust's excellent post that describes how people in the games industry are selected. It is a brilliant analysis showing that the talent hired is predominantly very good at presenting subjects and themselves, but not necessarily outstanding with the tech itself. These people are then involved in hiring the next employees, looking for the same traits. In short, it is a self-reinforcing trend that can harm a company's ability to innovate, because the ability to innovate is not necessarily what is looked for. In a way it helps to explain why studios lose their innovative edge over time. But it also has the consequence that outstanding tech talent who cannot present well is deemed a misfit in such a culture. Addressing this issue has many benefits for a company, including a transparent and open communication culture that enables feedback and input from people who are more thinkers than talkers. It was a relief for me to understand that not being good at interviews isn't a deep personal flaw. Interviews are not compatible with my mode of thinking systematically and methodically, as this needs time, but I am good at other things because of it.

What you get with me is someone who is outstandingly good with tech, who grasps its entire scope and finds innovative solutions, and who is driven to make the best of it. A bit like Thomas Tuchel, who is sometimes a bit of a lunatic, but always on a mission and delivers incredibly dedicated work. He always asks to be involved in all processes that have an impact on his work, which has sometimes caused trouble. But in studios developing software, asking for feedback from all parties should be the norm, as this is what Scrum implies: a learning organisation. Even if I work for you for a limited time, there is so much that you can gain from my involvement.